ISC’s BIND DNS server software is renowned for its rich feature set and compliance with standards, but not for its performance.

To allow us to focus on BIND’s performance we have recently invested significantly in new hardware in our test lab for dedicated 24/7 performance testing, and have also developed a new web-based system for automated performance tests.

Whilst we’ve had automated tests running in the past, they weren’t flexible, they didn’t run frequently enough, and the results were often skewed by high intrinsic variability. With this new system our developers can run on-demand tests against experimental code branches before merging those branches into our “master” code branch, and our QA staff can benchmark each planned release against previous versions to ensure that we are not inadvertently creating performance regressions.

The system maintains a list of build configurations that are each tested in turn, starting over again once every configuration has been tested. Instead of the single weekly run that we used to perform we now test each configuration two or three times daily. The system can be scaled horizontally by adding more test machines, allowing us to increase test capacity whilst maintaining centralised control.
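The cycling behaviour described above can be sketched as a simple round-robin queue. This is only an illustration, not ISC's implementation: the class name `RoundRobinQueue`, the method `next_configuration`, and the configuration labels are all hypothetical.

```python
from collections import deque


class RoundRobinQueue:
    """Hands out build configurations in turn, wrapping around at the end.

    Hypothetical sketch: any number of test machines could call
    next_configuration() on one central queue to pick up their next job.
    """

    def __init__(self, configurations):
        self._queue = deque(configurations)

    def next_configuration(self):
        # Take the configuration at the front, then rotate it to the back
        # so it is not tested again until every other one has had a turn.
        config = self._queue[0]
        self._queue.rotate(-1)
        return config


# Made-up configuration labels, for illustration only.
queue = RoundRobinQueue(["authoritative-master", "recursive-master", "recursive-branch"])
order = [queue.next_configuration() for _ in range(5)]
print(order)
```

Keeping the queue in one place is what would let extra test machines add capacity without changing the order in which configurations are visited.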

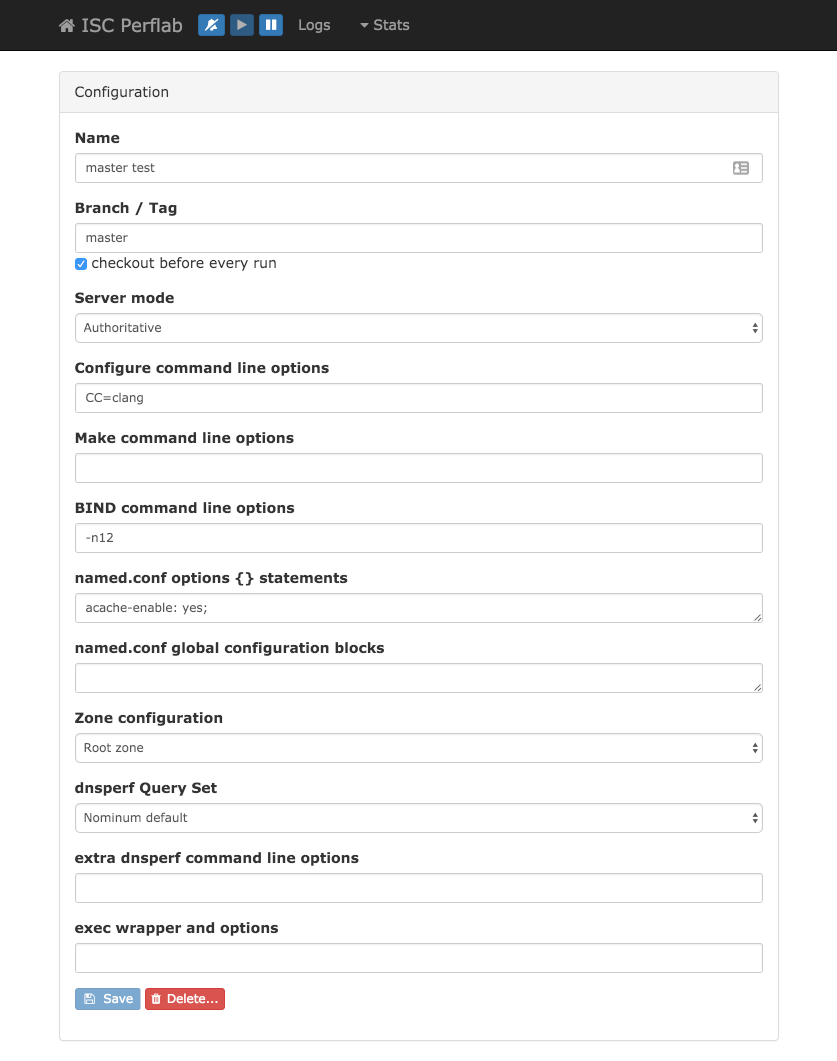

For each build configuration the users can set up a wide variety of options including which code branch (or tag) to extract from “git”, whether BIND is running as a recursive or authoritative server, etc.:

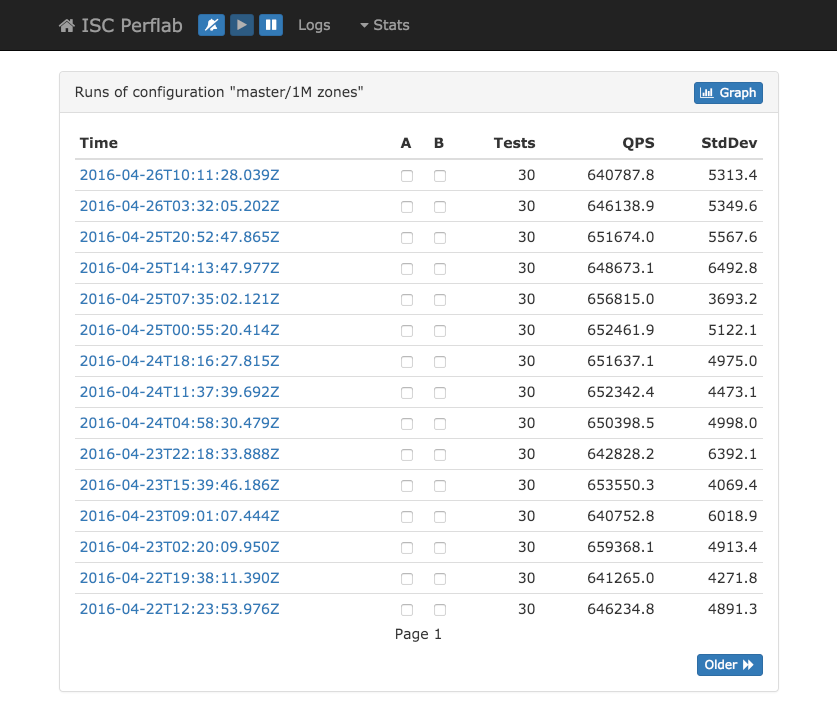

To measure mean throughput we start BIND and then run Nominum’s dnsperf on a separate server 30 times for 30 seconds at a time, calculating the mean and standard deviation over those runs. We exclude the first dnsperf run from the statistics to get a better picture of “steady state” performance, without the figures being skewed by start-up effects such as filling the cache when running recursive tests.
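As a minimal sketch of that calculation, assuming each dnsperf run has already been reduced to a single queries-per-second figure: the function name `summarise_runs`, the `warmup_runs` parameter, and the sample values are hypothetical. The use of the sample (n-1) standard deviation is also an assumption, since the post does not say which form is used.

```python
from statistics import mean, stdev


def summarise_runs(qps_per_run, warmup_runs=1):
    """Return (mean, standard deviation) of throughput, skipping warm-up runs.

    qps_per_run: queries-per-second figures, one per dnsperf run, in order.
    warmup_runs: number of leading runs to discard (the post discards one).
    """
    steady_state = qps_per_run[warmup_runs:]
    if len(steady_state) < 2:
        raise ValueError("need at least two runs after discarding warm-up runs")
    # statistics.stdev is the sample standard deviation (n - 1 denominator).
    return mean(steady_state), stdev(steady_state)


# Made-up figures: the first run is low because the cache is still cold.
runs = [100.0, 200.0, 202.0, 198.0]
average, deviation = summarise_runs(runs)
print(average, deviation)
```

Without the discard step, the cold first run in this made-up data would both lower the mean and inflate the standard deviation.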

Each configuration above also has options to control the set of zones to be loaded into the server (for authoritative tests) and the set of queries to be sent from “dnsperf”. To emulate various authoritative domain hosting models we have zone configurations such as:

For recursive tests we send queries that target a separate authoritative server that holds both the aforementioned single zone with 1M delegations and each of the 1M small zones referred to therein.

All build and test output is logged and captured for display and graphing and for statistical analysis. The screenshot below shows the historic results for one configuration. Users can drill down through this UI to see the complete dnsperf output for every test run. A snapshot of BIND’s memory usage is taken every five seconds and can be graphed too.

With this system in place we have been able to measure an 11% improvement in recursive performance with the introduction of “adaptive read/write locks” in BIND 9.11.0a1. We have also identified particular issues with BIND’s authoritative performance when returning a referral response (such as from the root zone or from a TLD), and our efforts are now concentrated on improving that. The tests on delegation zones have also shown the importance of the ‘acache-enable yes;’ setting for such zones, which typically improves performance by 50%.
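Percentage figures like these are relative changes in mean throughput between two builds. A minimal sketch of that arithmetic follows; the function name and the throughput numbers are hypothetical and are not ISC's measured values.

```python
def relative_change_percent(baseline_qps, candidate_qps):
    """Percentage change in mean throughput of a candidate build vs a baseline.

    Positive values are improvements, negative values are regressions.
    """
    if baseline_qps <= 0:
        raise ValueError("baseline throughput must be positive")
    return (candidate_qps - baseline_qps) * 100.0 / baseline_qps


# Made-up mean throughputs in queries per second, for illustration only.
print(relative_change_percent(100_000, 111_000))  # an improvement
print(relative_change_percent(100_000, 95_000))   # a regression
```

A change of this kind is only meaningful when it is large compared with the run-to-run standard deviation of each build.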

Work continues on this system to reduce the remaining variability in results, so that even very small changes in performance can be measured. We also plan to add support for testing our DHCP products (ISC dhcpd and Kea).
